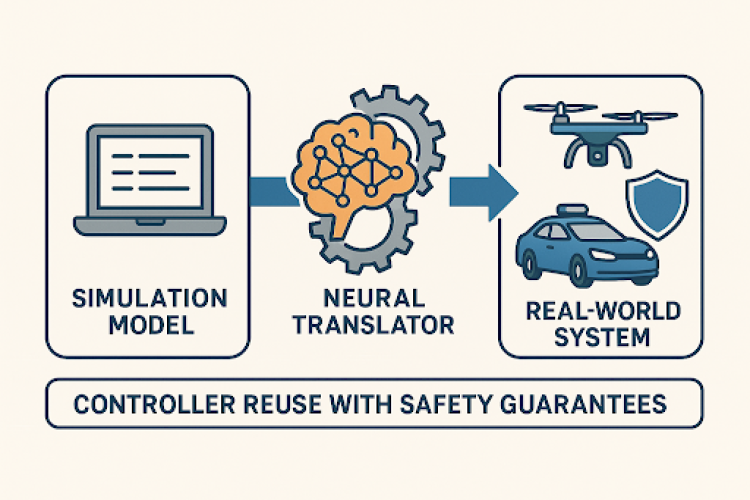

AI ‘translator’ would seamlessly transfer autonomous vehicle control logic from simulations to real world

Building the “brain” that guides a self-driving car or other autonomous vehicle is challenging enough. Ensuring this digital pilot remains reliable when moving from clean computer simulations to the messy unpredictability of the real world adds another layer of complexity.

The CU Boulder Hybrid Control Systems Lab has a solution – one so innovative that it was recognized as one of the five most disruptive ideas at the 2nd International Conference on Neuro-symbolic Systems and awarded a $100,000 grant from the Defense Advanced Research Projects Agency.

The paper, titled “Stochastic neural simulation relations for control transfer,” was authored by PhD student Alireza Nadali with associate professors Majid Zamani and Ashutosh Trivedi.

Traditionally, engineers have had to redesign their control systems from scratch every time they switch to a different autonomous vehicle or environment.

“We propose a smarter, simpler solution: an AI ‘translator’ that smoothly transfers control logic from simulations to real-world applications,” said Nadali.

He explained that the translator is built from neural networks that learn how the predictable, trusted simulation relates to the complexities of a real-world vehicle.

Once trained, it enables any controller designed and proven in the simulation to work immediately on the physical system. The translator adjusts control signals in real time so that the actual vehicle's behavior closely matches that of the simulated model.

“Unlike traditional trial-and-error approaches, our translator is grounded in rigorous mathematics, offering clear, probabilistic guarantees,” said Zamani, who leads the Hybrid Control Systems Lab. “It ensures the real vehicle's behavior consistently mirrors the simulation within a predefined margin of error and eliminates the need for costly and time-consuming post-deployment verification.”
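To make the idea more concrete, here is a minimal toy sketch in Python. It is not the authors' method: the paper trains neural networks and derives formal probabilistic guarantees, whereas this sketch assumes a one-dimensional linear “simulation” and a noisier “real” plant with different, made-up gains, and swaps in an ordinary least-squares fit for the neural-network training step. The translator then picks the real control input that should land the real state where the simulation says it will go. Every name and number here (`A_SIM`, `A_REAL`, `fit_real_model`, the 0.05 margin and so on) is hypothetical, and `fraction_within` only estimates, by repeated random trials, how often the real trajectory stays within the margin. It is an empirical check, not a proof.

```python
import random

# Hypothetical "simulation" model: x_next = A_SIM * x + B_SIM * u, noise-free.
A_SIM, B_SIM = 0.9, 0.5
# Hypothetical "real" plant: different gains plus a small Gaussian disturbance.
A_REAL, B_REAL, NOISE_STD = 0.95, 0.8, 0.01


def sim_step(x, u):
    """One step of the clean simulated model."""
    return A_SIM * x + B_SIM * u


def real_step(x, v, rng):
    """One step of the noisy real plant; its true gains are never shown to the translator."""
    return A_REAL * x + B_REAL * v + rng.gauss(0.0, NOISE_STD)


def sim_controller(x_sim):
    """Controller designed against the simulation only (hypothetical gain)."""
    return -0.6 * x_sim


def fit_real_model(rng, n_samples=500):
    """Estimate the real plant's gains (a, b) by least squares on random probe inputs.

    This stands in for the neural-network training described in the article.
    """
    sxx = sxv = svv = sxy = svy = 0.0
    for _ in range(n_samples):
        x, v = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
        y = real_step(x, v, rng)
        sxx += x * x
        sxv += x * v
        svv += v * v
        sxy += x * y
        svy += v * y
    # Solve the 2x2 normal equations [[sxx, sxv], [sxv, svv]] @ [a, b] = [sxy, svy].
    det = sxx * svv - sxv * sxv
    a_hat = (sxy * svv - sxv * svy) / det
    b_hat = (sxx * svy - sxv * sxy) / det
    return a_hat, b_hat


def make_translator(a_hat, b_hat):
    """Build a translator that turns the simulation's input into a real-plant input."""
    def translate(x_real, x_sim, u):
        # Choose v so that a_hat * x_real + b_hat * v equals the simulation's next state.
        return (sim_step(x_sim, u) - a_hat * x_real) / b_hat
    return translate


def run_episode(translate, rng, steps=50, x0=1.0):
    """Drive both systems with the same sim controller; return the worst deviation."""
    x_sim = x_real = x0
    worst = 0.0
    for _ in range(steps):
        u = sim_controller(x_sim)        # decision made on the simulated state
        v = translate(x_real, x_sim, u)  # translated into an input for the real plant
        x_sim = sim_step(x_sim, u)
        x_real = real_step(x_real, v, rng)
        worst = max(worst, abs(x_real - x_sim))
    return worst


def fraction_within(translate, epsilon, episodes=1000, seed=1):
    """Empirical share of episodes whose worst deviation stays within epsilon."""
    rng = random.Random(seed)
    hits = sum(run_episode(translate, rng) <= epsilon for _ in range(episodes))
    return hits / episodes


if __name__ == "__main__":
    rng = random.Random(0)
    a_hat, b_hat = fit_real_model(rng)
    translate = make_translator(a_hat, b_hat)
    print(f"estimated gains: a={a_hat:.3f}, b={b_hat:.3f}")
    print("fraction of runs within 0.05:", fraction_within(translate, 0.05))
```

Passing the controller's input straight through instead (for example `lambda x_real, x_sim, u: u`) pushes the deviation past the 0.05 margin after the very first step, which is the gap the translator is meant to close.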

By merging the flexibility and efficiency of deep-learning AI with the precision of formal verification methods, the approach significantly reduces development time and lets engineers confidently reuse controllers across different vehicles and scenarios.

The team’s work aligned with the broader goals of the conference, which aims to bring together novel concepts, theories and practices to advance the science and application of neuro-symbolic computing. The conference emphasizes design, analysis and synthesis techniques for neuro-symbolic systems, with particular interest in assurance metrics, robustness and ease of translation from design to production.

“In essence, our work bridges the gap between AI-driven control and formal, mathematically backed guarantees, bringing safer, faster and more efficient autonomous systems within practical reach,” said Trivedi, principal investigator in the Programming Languages and Verification Lab.