Student Work

Student work is connected to the goals of this course as follows. For the first goal, exploring the intersection of AI and neuroscience, we read and discussed a series of papers representing cutting-edge work in this area, such as "Deep Learning Methods to Process fMRI Data and Their Application in the Diagnosis of Cognitive Impairment: A Brief Overview and Our Opinion" by Wen et al. (2018) and "Designing Implicit Interfaces for Physiological Computing: Guidelines and Lessons Learned Using fNIRS" by Treacy Solovey et al. (2015). For the second goal, providing hands-on learning activities based on real-world datasets, students worked with data from the Infant Development, Emotion, and Attachment (IDEA) project. For the third goal, identifying ethical issues, we read and discussed seminal papers such as "Neuroethics: The Ethical, Legal, and Societal Impact of Neuroscience" by Farah (2012).

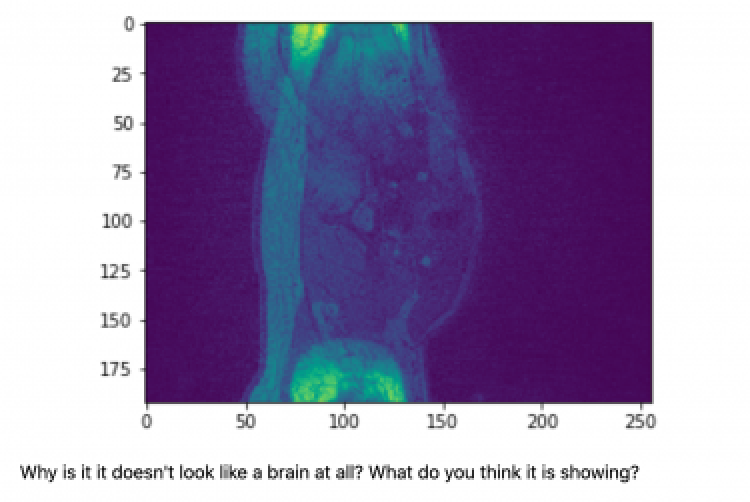

Hands-on learning activities take the form of programming assignments in Jupyter notebooks. Each week, students receive a set of new Jupyter notebook templates, which include tutorial code that students can run as well as coding assignments that students must complete independently. The primary method for assessing coding assignments is peer evaluation. During class meetings, students take turns presenting their Jupyter notebooks, which contain their solutions to the week's coding exercises. Each presenter walks through a couple of assignments and discusses the underlying AI or neuroscience concepts, while receiving feedback from the other students and the instructor.

Another indicator of students' improved performance is their decreasing need for scaffolding. Because our students have a background in computer science, neuroscience concepts are relatively new to them, and most had never read a paper on brain imaging. Initially, therefore, prompt questions were provided to guide students through each paper. As students became more comfortable, they gradually relied less on these prompts. At one point in the semester, we discussed as a class whether prompt questions were still needed, and students reached a consensus that they were no longer necessary. In other words, students no longer needed scaffolding to read neuroscience papers; they demonstrated increased independence in reading papers in a new field (neuroscience) and in connecting that work to AI concepts.

See Examples of Assignments here.

Compared to the pilot of this course offered several years ago, students' performance shifted along the technical dimension. The original pilot was mostly conceptual, with very little technical work such as coding assignments. In this course, students had the chance to develop algorithms, write code to analyze real brain imaging data, and build machine learning models. The shift toward greater technical sophistication has been effective: students have been able to complete their assigned coding exercises and to share and discuss their solutions in class.