Sandra Bae, ATLAS PhD Student, Awarded at VIS 2023

Sandra Bae, PhD student and member of the Utility Research Lab and ACME Lab at ATLAS, has been honored with a Best Paper Honorable Mention at VIS 2023 for her research on network physicalizations.

Billed as “the premier forum for advances in theory, methods and applications of visualization and visual analytics”, VIS 2023 will be held in Melbourne, Australia, from October 22-27, and is sponsored by IEEE. The Best Papers Committee bestows honorable mentions on the top 5% of publications submitted.

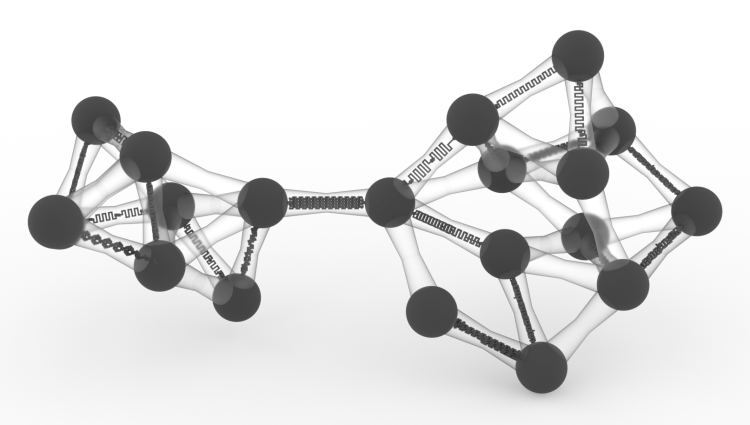

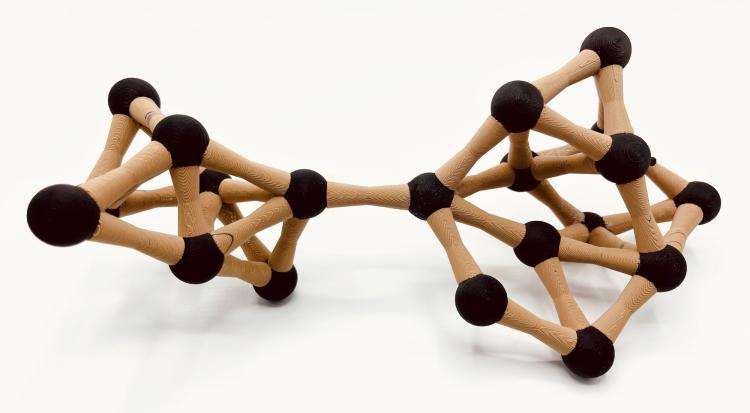

The paper introduces a computational design pipeline for 3D printing physical representations of networks that support touch interaction through capacitive sensing and computational inference.

[video:https://youtu.be/uv0Yu0WUeSQ]

A Computational Design Process to Fabricate Sensing Network Physicalizations

S. Sandra Bae, Takanori Fujiwara, Anders Ynnerman, Ellen Yi-Luen Do, Michael L. Rivera, Danielle Albers Szafir

Abstract

Interaction is critical for data analysis and sensemaking. However, designing interactive physicalizations is challenging as it requires cross-disciplinary knowledge in visualization, fabrication, and electronics. Interactive physicalizations are typically produced in an unstructured manner, resulting in unique solutions for a specific dataset, problem, or interaction that cannot be easily extended or adapted to new scenarios or future physicalizations. To mitigate these challenges, we introduce a computational design pipeline to 3D print network physicalizations with integrated sensing capabilities. Networks are ubiquitous, yet their complex geometry also requires significant engineering considerations to provide intuitive, effective interactions for exploration. Using our pipeline, designers can readily produce network physicalizations supporting selection (the most critical atomic operation for interaction) by touch through capacitive sensing and computational inference. Our computational design pipeline introduces a new design paradigm by concurrently considering the form and interactivity of a physicalization into one cohesive fabrication workflow. We evaluate our approach using (i) computational evaluations, (ii) three usage scenarios focusing on general visualization tasks, and (iii) expert interviews. The design paradigm introduced by our pipeline can lower barriers to physicalization research, creation, and adoption.

Bae describes potential use cases for sensing network physicalizations:

- Accessibility visualization - Accessible visualizations (e.g., tactile visualizations) focus on making data visualization more inclusive, particularly for those with low vision or blindness. However, most tactile visualizations are static and non-interactive, which reduces data expressiveness and inhibits data exploration. This technique can create more interactive tactile visualizations.

- AR/VR - Most AR/VR devices use computer vision (CV), but most devices using CV cannot reproduce the haptic benefits that we naturally leverage (holding, rotating, tracing) with our sense of touch. Past studies confirm the importance of tangible inputs when virtually exploring data. However, making tangible devices for AR/VR interactive currently requires too much instrumentation. This technique would enable developers to more easily produce fully functional, responsive controllers straight from the printer in a single pass.

The work continues as Bae plans to pursue more complex designs and richer interactivity including:

- Fabricating bigger networks - The biggest network Bae has 3D printed so far is 20 nodes and 40 links, but this is rather small for most network datasets. She will scale this technique to support bigger networks.

- Supporting output - Interactive objects receive input (e.g., from touch) and produce output (e.g., light, sound, color change) in a controlled manner. The sensing network currently addresses the first part of the interaction loop by responding to touch inputs, but she next wants to explore how to support output.

Bae showcased this research along with fellow ATLAS community members at the Rocky Mountain RepRap Festival earlier this year. We’re excited to see where her innovative research leads next.