Minority Report-inspired interface allows us to explore time in new ways

Imagine a scene—a bird feeder on a summer afternoon, the dark of night descending over the Flatirons, a fall day on a university campus. Now imagine moving backwards and forwards through time on a single aspect of that setting while everything else remains. One ATLAS engineer is building technology that lets us experience multiple time scales all at once.

David Hunter developed Proteus with expertise from ACME Lab members Suibi Weng, Rishi Vanukuru, Annika Mahajan, Ada Zhao and Leo Ma, and advising from professor and lab director Ellen Do.

Brad Gallagher and Chris Petillo in the B2 Center for Media, Arts and Performance provided critical technical support to make the project come alive in the B2 Black Box Studio.

“Proteus: Spatiotemporal Manipulations” by David Hunter, ATLAS PhD student, in collaboration with his ACME Lab colleagues, allows people to simultaneously observe different moments in time through a full-scale interactive experience combining video projection, motion capture, audio and cooperative elements.

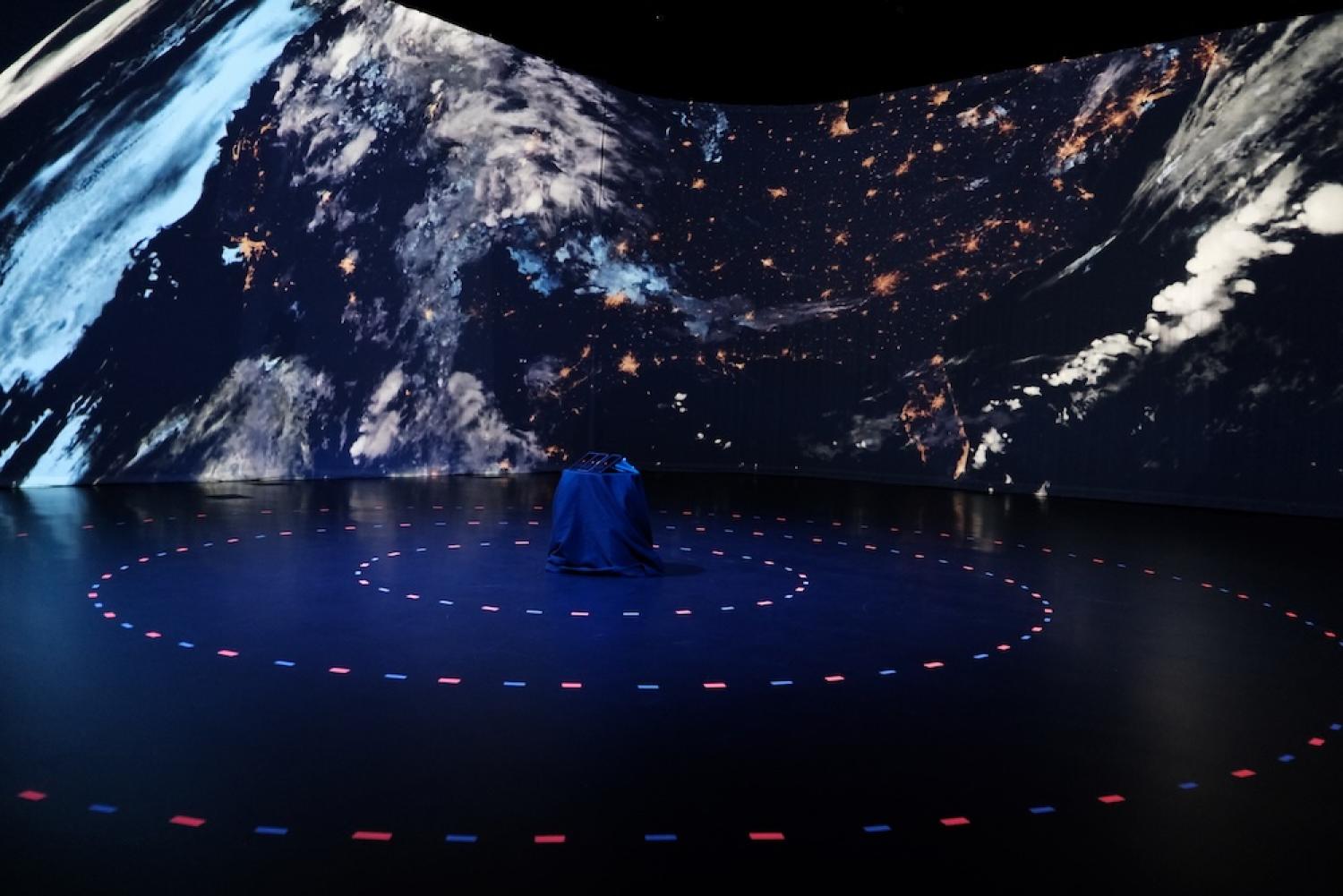

The project was shown through the creative residency program in the B2 Center for Media, Arts and Performance at the Roser ATLAS Center. Working in the B2 Black Box Studio, the project team used 270-degree video projections, a spatial audio array and motion capture technology to create an often larger-than-life way to explore many different time scales at once. Simultaneous projections highlighted the lifecycle of bacteria in a petri dish, a day in the life of a street corner on campus, and weather patterns at a global scale, among other vignettes.

This tangible manipulation of time within a space can feel disorienting at first. It takes a while to adjust to what is happening, a unique sensation that has an expansive, almost psychedelic quality. But it may also have practical applications.

We spoke to Hunter about the inspiration behind Proteus, possible use cases and what comes next.

This Q&A has been lightly edited for length and clarity.

Several people can explore different moments in time simultaneously.

Tell us about the inspiration for Proteus.

There are scenes and settings where you want to see a place or situation at two distinct time frames, and for that comparison to not necessarily be hard-edged or side by side. You want it to be interpolated through time, so you can see patterns of change in space over that time.
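For readers who think in code, the idea of interpolating a scene through time can be sketched as a per-pixel frame lookup: each pixel carries its own fractional time, and its value is blended from the two nearest frames. This is only an illustrative sketch, not the Proteus implementation. The function name `sample_nonuniform`, the grayscale nested-list frame format and the linear blend are all assumptions made for the example.

```python
def sample_nonuniform(frames, time_map):
    """Build one image in which every pixel shows its own moment in time.

    frames:   list of 2D grids (lists of rows) of grayscale values, all the
              same size. Hypothetical stand-in for decoded video frames.
    time_map: 2D grid of fractional frame indices, one per pixel.
    """
    last = len(frames) - 1
    out = []
    for y, row in enumerate(time_map):
        out_row = []
        for x, t in enumerate(row):
            # Clamp the requested time to the available frames.
            t = min(max(t, 0.0), float(last))
            lo = int(t)              # frame at or before t
            hi = min(lo + 1, last)   # next frame, or the last one
            w = t - lo               # blend weight toward the later frame
            value = (1 - w) * frames[lo][y][x] + w * frames[hi][y][x]
            out_row.append(value)
        out.append(out_row)
    return out


# Three tiny 2x2 "frames" with brightness 0, 10 and 20.
frames = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[10.0, 10.0], [10.0, 10.0]],
    [[20.0, 20.0], [20.0, 20.0]],
]
# Each pixel asks for a different moment.
time_map = [[0.0, 0.5], [1.0, 2.0]]
image = sample_nonuniform(frames, time_map)
```

Because the time map can vary smoothly from pixel to pixel, the transition between two moments need not be a hard edge or a side-by-side split.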

Describe the early iterations of this project.

It was originally a tabletop setup with projection over small robots, and you could manipulate the robots to produce similar kinds of effects. There was a version running with a camera giving you a live video feed, but we switched it to curated videos as it was easier to understand what was happening with time manipulation. You're potentially making quite a confusing image for yourself, so the robots gave you something tangible to hold on to.

How did it evolve into the large-scale installation it is now?

We thought it would be interesting to see this at a really large scale in the B2, and the creative residencies made that possible. That's when we moved away from robots and it was like, “Well, how would you control it in this sort of space?”

I took a projection mapping course last semester and worked on a large-scale projection, but then we changed it to hand interaction—gesture-based in the air, kind of like “Minority Report.”

As you move closer to or farther from the screen, you change the time scale of part of the scene.

How do you describe what takes place when people interact with Proteus?

The key is interaction: people can actually control the time lapse. Usually, time lapses are linear, not two-dimensional, and we don't have control over them. Here, you can focus on what interests you across different time periods, or hold two points in time side by side to see patterns and relationships as they change across space and time. This also enables multiple visitors to find the things they are interested in; there isn't one controller of the scene. It is collaborative.
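One way to imagine this kind of shared control is as a set of soft-edged "portals," one per visitor, each pulling a region of the scene toward a different moment. The sketch below is a guess at how that could be modeled, not a description of how Proteus works. The linear distance-to-frame mapping, the circular portal shape, the smoothstep falloff and every name and number in it are assumptions made for illustration.

```python
import math


def distance_to_frame(distance_m, near_m, far_m, frame_count):
    """Map a visitor's distance from the screen to a frame index.

    Assumes a simple linear mapping, clamped to the [near_m, far_m] range.
    """
    u = (distance_m - near_m) / (far_m - near_m)
    u = min(max(u, 0.0), 1.0)
    return u * (frame_count - 1)


def portal_time_map(width, height, base_frame, portals):
    """Return a 2D grid of fractional frame indices, one per pixel.

    portals: list of (cx, cy, radius, target_frame) tuples, one per visitor.
    Pixels outside every portal stay at base_frame.
    """
    grid = [[float(base_frame)] * width for _ in range(height)]
    for cx, cy, radius, target in portals:
        for y in range(height):
            for x in range(width):
                d = math.hypot(x - cx, y - cy)
                if d >= radius:
                    continue
                s = 1.0 - d / radius       # 1 at the center, 0 at the edge
                w = s * s * (3 - 2 * s)    # smoothstep for a soft edge
                grid[y][x] += w * (target - grid[y][x])
    return grid


# A visitor standing 2 m away, in a hypothetical 1 m to 3 m tracking range,
# scrubbing a 101-frame time lapse.
frame = distance_to_frame(2.0, near_m=1.0, far_m=3.0, frame_count=101)
# One portal centered in a tiny 5x5 scene, over a scene resting at frame 0.
time_map = portal_time_map(5, 5, base_frame=0, portals=[(2, 2, 2.0, 10.0)])
```

Each additional visitor would simply add another tuple to `portals`, so no single person controls the whole scene.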

What are some of the use cases you’ve thought about for this technology?

Anything with a geospatial component—a complex scene where many things are happening at once. You might want to keep track of something happening in the past while still tracking something else happening at another time.

You can use these portals to freeze multiple bits of action or set them up to visualize where things have gone at different points in time and space.

It's always been about collaboration—situation awareness where lots of people are trying to interrogate one image and see what everyone else is doing at the same time.

Then there’s film analysis: Can we put in a whole movie and perhaps you can find interesting relationships and compositions around that? This could be a fun way to spatially explore a narrative, too.

We're also looking at how it could be used for mockup design. Let's say you're prototyping an app and you have 50 different variations. You could collapse those all into one space, interrogate them through “time,” then mix and match different portions of your designs to come up with new combinations. It also works with volumetric images like body scans, where we swap time for depth.

What are the creative influences that drove the visual style of the piece?

I've long been interested in time lapses, like skateboard photography where multiple snapshots are overlaid on the same space as a single image.

There's all this work by Muybridge and Marey, who invented chronophotography. That's how they worked out that horses leave the ground while they run.

David Hockney did a ton of Polaroid work. There's a famous one of people playing Scrabble, shot from his perspective. All these different Polaroids are stuck down next to each other, not as a true representation of space but as a way of capturing time within that space—breaking the unity of the image.

Khronos Projector by Alvaro Cassinelli kicked off this research sprint and prompted me to look back at my interest in time and photography.

Several time lapse videos are projected simultaneously on the Black Box Studio's 270-degree screen.

Proteus is controlled with an app on a mobile phone modified to work with motion capture.

It does force your brain to work in a way that it is not used to, which is a really cool thing to happen in a creative sense but also in a technical sense.

We aren't used to seeing anything with non-uniform time. When we're watching a video and want to find a particular point in it, we see the whole image rewind or fast-forward. Of course that makes sense in a lot of situations, but there could be interesting use cases for interactive non-uniform time.

What makes ATLAS an ideal place for this type of research?

There's all the people, whether it's research faculty who are interested in asking questions like, “How can we make a novel system or improve research that's going on in this area?” Or it's super strong technical expertise, which is like, “Let's have a go at making this work in a projection environment.”

What’s next for the Proteus project?

At the moment, I can only compare by changing the time on a region of space and I can compare a region of space against another region at different times. But it might be interesting to be able to break the image and say, “Actually, I want to clone this region and see it from a different time period.” Can I reconstitute the image in some way?

Proteus will be running in the B2 Black Box Studio as part of:

ATLAS Research Open Labs

Roser ATLAS Center

October 10, 2025

3-5pm

FREE, no registration needed