RealitySketch introduces a new way to embed dynamic and responsive graphics in the real world. A growing number of AR sketching tools let users draw and embed sketches in the real world, but the sketched content in these tools is inherently static: it floats in mid-air without responding to the real world.

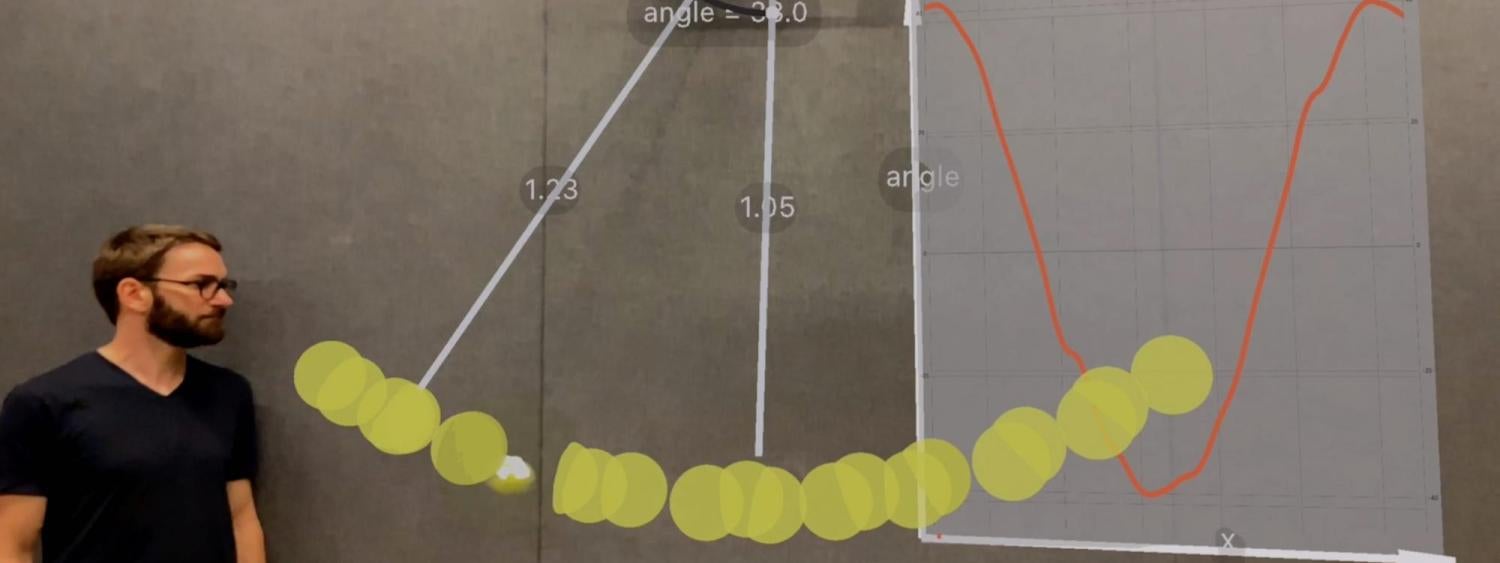

In RealitySketch, the user draws graphical elements on a mobile AR screen and binds them to physical objects; the sketched elements are then controlled dynamically, in real time, by the corresponding physical motion. The user can also quickly visualize and analyze real-world phenomena through responsive graph plots or interactive visualizations. This technology is part of a body of research aimed at expanding the interaction techniques available for capturing, parameterizing, and visualizing real-world motion without pre-defined programs and configurations. A handful of application scenarios appear toward the end of the video, including physics education, sports training, and in-situ tangible interfaces.
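To make the idea of parameterizing real-world motion concrete, here is a minimal sketch of how a sketched angle might be bound to a tracked object, such as a pendulum bob. It is an illustration only, not RealitySketch's implementation: the function names (`angle_deg`, `record_angles`), the screen-coordinate convention, and the sample positions are all assumptions made for this example.

```python
import math

def angle_deg(pivot, bob):
    """Angle of the pivot-to-bob line measured from straight down, in degrees.

    Assumes 2D screen coordinates where y grows downward (hypothetical convention).
    """
    dx = bob[0] - pivot[0]
    dy = bob[1] - pivot[1]
    return math.degrees(math.atan2(dx, dy))

def record_angles(pivot, tracked_positions):
    """Turn a sequence of tracked positions into a series of angle values.

    The resulting list is the kind of data a responsive graph plot could display.
    """
    return [angle_deg(pivot, p) for p in tracked_positions]

# Hypothetical tracked positions of the bob over three frames.
pivot = (0.0, 0.0)
frames = [(0.0, 1.0), (1.0, 1.0), (-1.0, 1.0)]
angles = record_angles(pivot, frames)
```

Each new tracked position yields a new angle value, so anything bound to that value (a label, an arc, a plotted point) would update as the object moves.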

THING Lab RealitySketch Website