Redefining Classroom AI Capabilities: Strand 1

We last left our intrepid Strand 1 researchers on the precipice of the incoming classroom data from our cross-strand data collection team. As this new data came in, the Strand 1 team spent the quarter facing a new challenge: how exactly will we use this data to define the capabilities of our AI Partner?

What Can Our AI Partner Hear & Understand?

Before our future AI Partner can help facilitate small group classroom collaborations, it’ll need to recognize what students are saying. Strand 1’s Speech Recognition team was hard at work this quarter to help make this a possibility. This group of researchers selected five sessions from the datasets collected in various Denver Public Schools and St. Vrain Valley Schools middle school classrooms. The team manually transcribed the sessions and marked the times when each student was speaking (to the nearest 0.1 sec). These data are being used to evaluate both background filter performance and diarization performance using Automatic Speech Recognition (ASR), the process of automatically transcribing audio input.
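To make the evaluation idea concrete, here is a minimal sketch of how hand-marked speaking times could be compared against a system's output at the same 0.1-second resolution. It is an illustration only, not the team's actual scoring method: the `Segment` format, the function names, and the simple disagreement measure are all assumptions, and it presumes the system's speaker labels already match the annotators' labels. It is also simpler than the standard diarization error rate, which handles overlapping speech and label mapping.

```python
from typing import Dict, List, Tuple

# Hypothetical segment format: (speaker label, start in seconds, end in seconds)
Segment = Tuple[str, float, float]

FRAME_SEC = 0.1  # same resolution as the manual annotations


def to_frames(segments: List[Segment]) -> Dict[int, str]:
    """Map each 0.1 s frame index to the speaker marked as talking in it."""
    frames: Dict[int, str] = {}
    for speaker, start, end in segments:
        first = round(start / FRAME_SEC)
        last = round(end / FRAME_SEC)
        for i in range(first, last):
            # Simplification: if segments overlap, the later one wins.
            frames[i] = speaker
    return frames


def frame_disagreement_rate(reference: List[Segment],
                            hypothesis: List[Segment]) -> float:
    """Fraction of speech frames where the two labelings disagree."""
    ref = to_frames(reference)
    hyp = to_frames(hypothesis)
    scored = set(ref) | set(hyp)  # frames where either side marks speech
    if not scored:
        return 0.0
    errors = sum(1 for i in scored if ref.get(i) != hyp.get(i))
    return errors / len(scored)


if __name__ == "__main__":
    manual = [("A", 0.0, 1.0), ("B", 1.0, 2.0)]
    system = [("A", 0.0, 1.2), ("B", 1.2, 2.0)]
    print(frame_disagreement_rate(manual, system))
```

In this toy example the system hands the turn from speaker A to speaker B 0.2 seconds late, so two of the twenty frames disagree.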

To facilitate this work, the team installed the recently released SpeechBrain system and provided samples of speech from each target student. The team then used the novel approach of having the system use these samples to filter out segments that do not contain speech from any of the target speakers.
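One common way to do this kind of target-speaker filtering is to compare a voice "fingerprint" (an embedding vector) of each audio segment against fingerprints built from the enrollment samples. The sketch below assumes that approach; the article does not say how the team's filter works internally. The function names, the toy two-dimensional vectors, and the `0.8` threshold are hypothetical, and in practice the embeddings would come from a speaker-embedding model rather than being typed in by hand.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def keep_target_segments(segment_embeddings: List[Sequence[float]],
                         target_embeddings: Dict[str, Sequence[float]],
                         threshold: float = 0.8) -> List[int]:
    """Return indices of segments that sound like any enrolled target speaker.

    The threshold is an assumed value; a real system would tune it on
    annotated data.
    """
    kept: List[int] = []
    for idx, seg in enumerate(segment_embeddings):
        best = max(
            (cosine_similarity(seg, t) for t in target_embeddings.values()),
            default=0.0,
        )
        if best >= threshold:
            kept.append(idx)
    return kept


if __name__ == "__main__":
    # Toy vectors standing in for real speaker embeddings.
    targets = {"student_1": [1.0, 0.0], "student_2": [0.0, 1.0]}
    segments = [[0.9, 0.1], [0.5, 0.5], [-1.0, 0.0]]
    print(keep_target_segments(segments, targets))
```

Segments that fall below the threshold for every target student, such as background chatter from a neighboring group, are dropped before transcription.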

The group will continue the current work on filtering background noise and tuning Google ASR for optimum performance, including providing language model context. They will also be augmenting the existing hardware testing pipeline and building a similar pipeline compatible with cameras.

In conjunction with the Speech Recognition team, the Annotation Working Group and the Content Analysis team are also piloting other types of annotation of the students’ utterances. These include a straightforward on-topic/off-topic classification, more Abstract Meaning Representations (AMRs), and a layer of Rhetorical Structure Theory (RST) annotation. In addition, Strand 1 is developing a new AMR parsing model that will encompass this new, preliminary RST annotation. Experimental results are expected soon! To parse the student dialogues from our classroom datasets, the team is also working on a few-shot learning model, a machine learning model that aims to learn from a handful, rather than hundreds or thousands, of training samples. The teams’ best AMR parsing results so far have been an 81.4% accuracy rate on a medium-sized model, and they hope to test larger AMR parser models in the coming weeks.

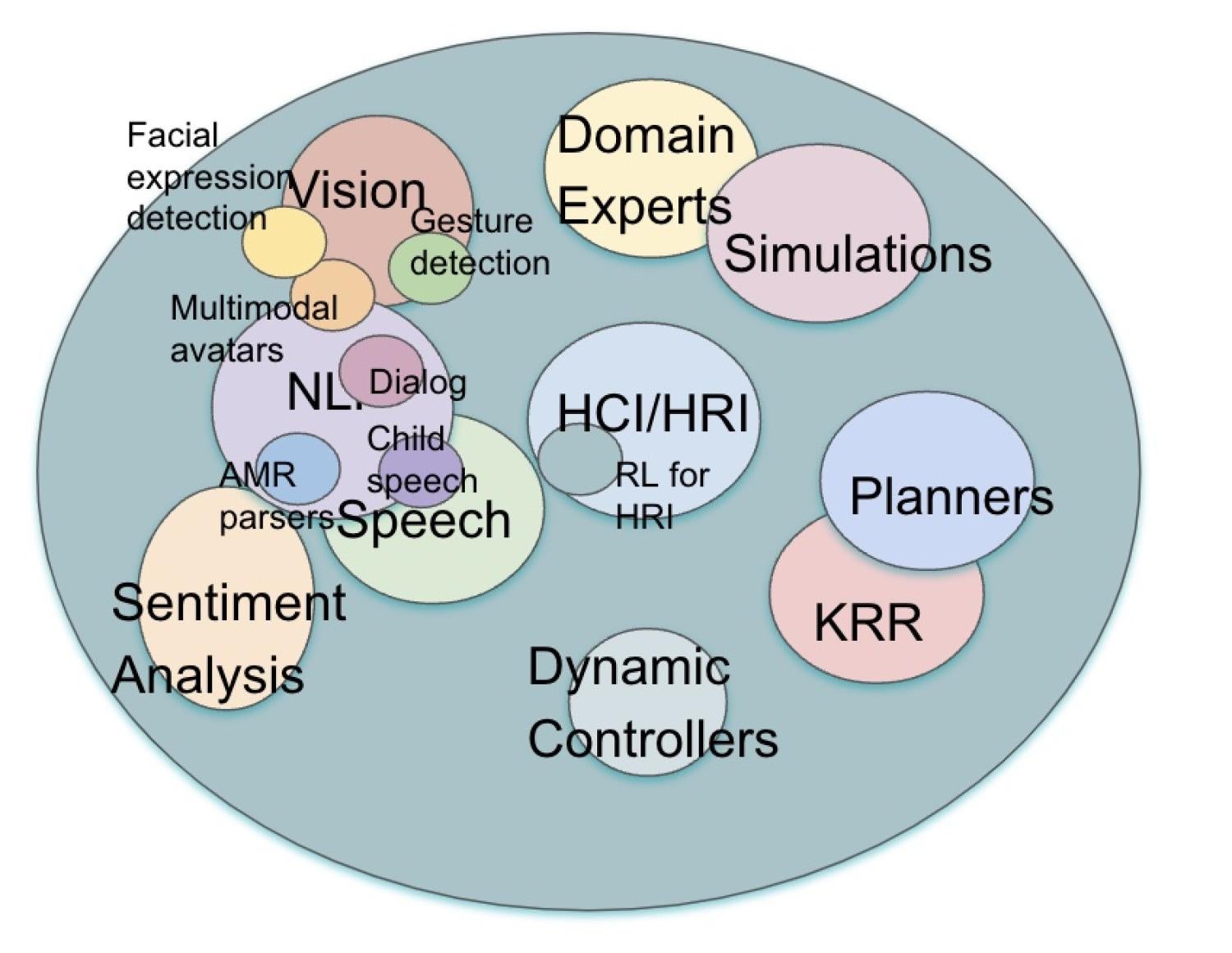

Recent Strand 1 advances in AI capabilities

Our Dialogue Management team was also hard at work helping our future AI Partner understand student speech. This quarter, the team worked on two dialogue systems surveys: one that describes current dialogue models and architectures, and another focused on designing human-centered dialogue systems. The latter explicitly recognizes the different stakeholders in the dialogue process and focuses on end-user needs, design values, data collection, and the evaluation of these systems.

What Can Our AI Partner Understand & Do?

As several teams work toward creating the ears and eyes for the Partner, other Strand 1 teams—with the help of Strands 2 and 3—are working to help the Partner understand what to do with this information. The Annotation team worked toward creating annotations that will help our AI Partner detect which students are working toward the assigned task and which students are off task.

On-task students might be talking about the content of the class, for example, or they might be talking through a group assignment. The group also hopes to annotate “attentiveness” and “mind-wandering,” as well as students who are moving the conversation forward. As their work progresses, the Annotation team will work closely with Strand 3 for guidance on socially relevant annotations, such as inclusive speech versus harmful speech.

While the Annotation group strives to help our AI Partner understand what constitutes on-topic and off-topic behavior, the Reinforcement Learning (RL) team focuses on enabling the AI Partner to learn and improve discourse policies from real interactions in the classroom. RL is a machine learning paradigm that allows AI agents to learn how to take actions (i.e., learn policies) in an environment by interacting with it or by observing others’ interactions. The team works closely with our Strand 2 experts on Collaborative Problem Solving skills to understand which policies will help the AI Partner learn. During this quarter, the RL team developed a new RL approach that enables them to teach a single agent to learn a basket of policies instead of only one. In the coming quarters, the team hopes to adapt their approach to the classroom with the goal of seeing if they can tune the AI Partner’s behavior on the fly to match teachers’ changing objectives.