Publication

Humans learn how and when to apply forces in the world via a complex physiological and psychological learning process. Attempting to replicate this in vision-language models (VLMs) presents two challenges: VLMs can produce harmful behavior, which is particularly dangerous for VLM-controlled robots that interact with the world, but imposing behavioral safeguards can limit their functional and ethical extents. We conduct two case studies on safeguarding VLMs that generate forceful robotic motion, finding that safeguards reduce both harmful and helpful behavior involving contact-rich manipulation of human body parts. Then, we discuss the key implication of this result for model evaluation and robot learning: value alignment may impede desirable robot capabilities.

Vision language models (VLMs) exhibit vast knowledge of the physical world, including intuition of physical and spatial properties, affordances, and motion. With fine-tuning, VLMs can also natively produce robot trajectories. We demonstrate that eliciting wrenches, not trajectories, allows VLMs to explicitly reason about forces and leads to zero-shot generalization in a series of manipulation tasks without pretraining.

This article reviews contemporary methods for integrating force, including both proprioception and tactile sensing, in robot manipulation policy learning. We conduct a comparative analysis on various approaches for sensing force, data collection, behavior cloning, tactile representation learning, and low-level robot control.

Estimating the location of contact is a primary function of artificial tactile sensing apparatuses that perceive the environment through touch. Existing contact localization methods use flat geometry and uniform sensor distributions as a simplifying

Robot trajectories used for learning end-to-end robot policies typically contain end-effector and gripper position, workspace images, and language. Policies learned from such trajectories are unsuitable for delicate grasping, which requires tightly

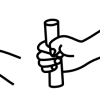

Large language models (LLMs) can provide rich physical descriptions of most worldly objects, allowing robots to achieve more informed and capable grasping. We leverage LLMs’ common sense physical reasoning and code-writing abilities to infer an

Shape displays that actively manipulate surface geometry are an expanding robotics domain with applications to haptics, manufacturing, aerodynamics, and more. However, existing displays often lack high-fidelity shape morphing, high-speed deformation

We describe a force-controlled robotic gripper with built-in tactile and 3D perception. We also describe a complete autonomous manipulation pipeline consisting of object detection, segmentation, point cloud processing, force-controlled manipulation

Peg-in-hole assembly of tightly fitting parts often requires multiple attempts. Parts need to be put together by performing a wiggling motion of undetermined length and can get stuck, requiring a restart. Recognizing unsuccessful insertion attempts

Sensory feedback provided by prosthetic hands shows promise in increasing functional abilities and promoting embodiment of the prosthetic device. However, sensory feedback is limited based on where sensors are placed on the prosthetic device and has
