Building CLIP From Scratch

by Matt Nguyen

Open-World Object Recognition on the Fashion MNIST Dataset

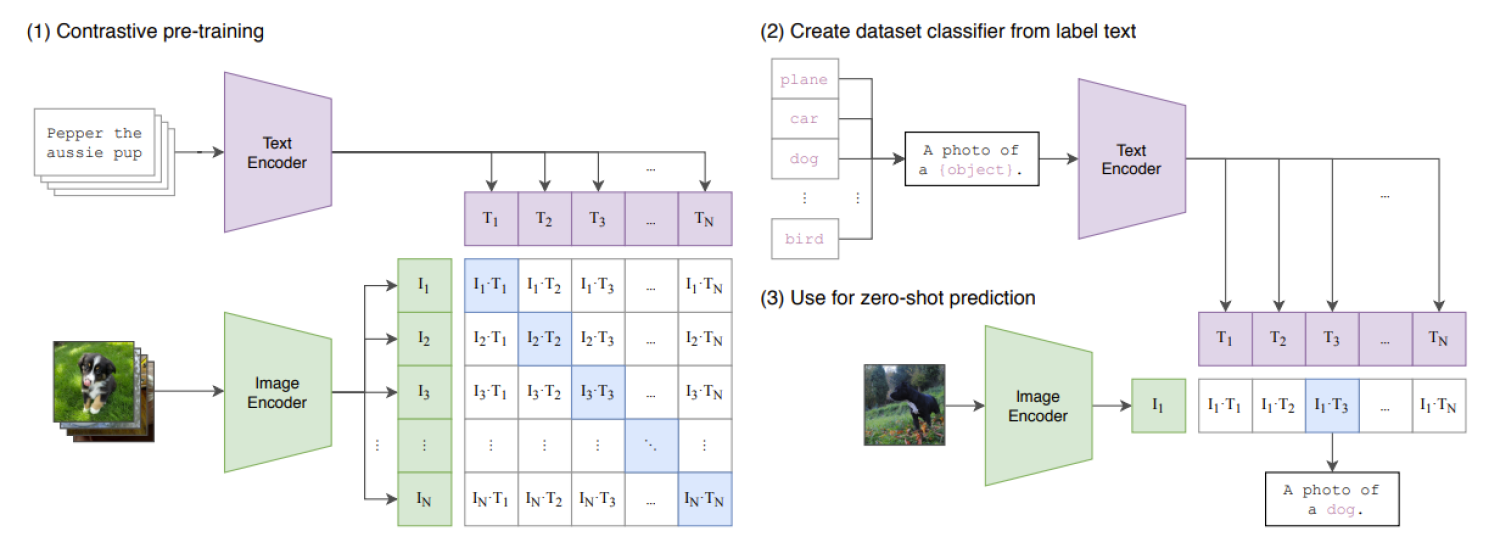

Computer vision systems were historically limited to a fixed set of classes. CLIP changed this by enabling open-world object recognition through “predicting which image and text pairings go together”. It does so by training an image encoder and a text encoder so that, within each training batch, the features of matching image and text pairs have high cosine similarity and the features of mismatched pairs have low cosine similarity. This is shown in the contrastive pre-training portion of Figure 1, where the dot product between the image features {I_1 … I_N} and the text features {T_1 … T_N} is taken.
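To make the contrastive step concrete, here is a minimal sketch of the similarity matrix and a symmetric cross-entropy loss over it. It is written in plain Python so it runs without dependencies, and it is not the code used later in this tutorial. The function names (`l2_normalize`, `similarity_matrix`, `contrastive_loss`), the toy feature vectors, and the fixed `temperature` value are all assumptions made for illustration.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(image_feats, text_feats):
    """Return the N x N matrix whose entry [i][j] is cos(I_i, T_j)."""
    imgs = [l2_normalize(v) for v in image_feats]
    txts = [l2_normalize(v) for v in text_feats]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]

def contrastive_loss(sim, temperature=0.07):
    """Symmetric cross-entropy where the correct pair sits on the diagonal.

    The temperature is fixed here for simplicity (an assumption of this sketch).
    """
    n = len(sim)

    def mean_cross_entropy(rows):
        total = 0.0
        for k, row in enumerate(rows):
            logits = [s / temperature for s in row]
            m = max(logits)  # subtract the max for numerical stability
            log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
            total += log_sum - logits[k]  # -log softmax at the true index k
        return total / n

    columns = [list(c) for c in zip(*sim)]  # transpose: text-to-image direction
    return 0.5 * (mean_cross_entropy(sim) + mean_cross_entropy(columns))

# Hypothetical features for a batch of N = 3 image/text pairs.
image_feats = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
text_feats = [[2.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]

sim = similarity_matrix(image_feats, text_feats)
loss = contrastive_loss(sim)
```

Each row of `sim` compares one image against every text in the batch, and each column compares one text against every image. Because the toy features are already well aligned, the diagonal entries dominate and the loss is close to zero; with random features it would sit near log(N).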

In this tutorial, we are going to build CLIP from scratch and test it on the Fashion MNIST dataset. Some of the sections in this article are taken from my vision transformers article. A notebook with the code from this tutorial can be found here.