Is Open World Vision in Robotic Manipulation Useful?

by Uri Soltz

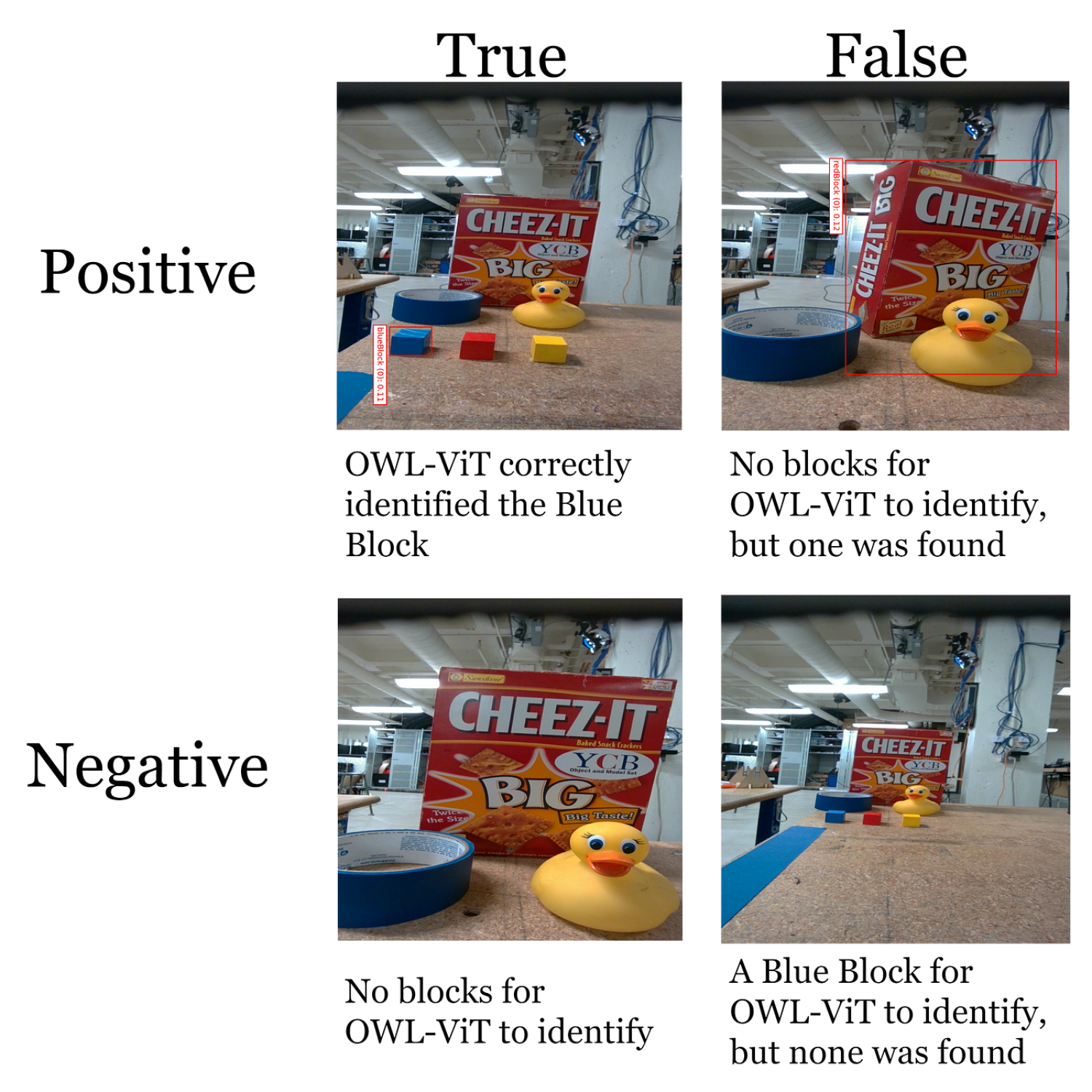

Google’s Open-World Localization Vision Transformer (OWL-ViT), combined with Meta’s “Segment Anything”, has emerged as the go-to pipeline for zero-shot object recognition in robotic manipulation, where none of the objects were used in training the classifier. Yet OWL-ViT was trained on static images from the internet and has limited fidelity in a manipulation context. Its confusion matrix has non-negligible off-diagonal entries, meaning it mistakes some objects for others, and we show that processing the same view from different distances significantly increases performance. Still, OWL-ViT works better for some objects than for others and is thus inconsistent. Our experimental setup is described in “Exploring MAGPIE: A Force Control Gripper w/ 3D Perception” by Streck Salmon.
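To make the two ideas in this summary concrete, here is a minimal sketch in plain Python. It is not the pipeline used in the experiments. The averaging rule in `fuse_labels`, the per-distance score dictionaries, and the object names are all assumptions made for illustration. The text above only states that processing the same view from several distances helps, not how the results are combined.

```python
from collections import defaultdict


def fuse_labels(per_distance_scores):
    """Pick one label for an object from scores gathered at several distances.

    per_distance_scores: list of dicts mapping label -> confidence in [0, 1],
    one dict per camera distance (a hypothetical format, not OWL-ViT output).
    Assumed fusion rule: average the score per label, choose the highest.
    """
    if not per_distance_scores:
        raise ValueError("need scores from at least one distance")
    totals = defaultdict(float)
    for scores in per_distance_scores:
        for label, score in scores.items():
            totals[label] += score
    n = len(per_distance_scores)
    # A label missing at some distance contributes 0 for that distance.
    return max(totals, key=lambda label: totals[label] / n)


def confusion_matrix(true_labels, predicted_labels, classes):
    """Count predictions: rows are true classes, columns are predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for truth, predicted in zip(true_labels, predicted_labels):
        matrix[index[truth]][index[predicted]] += 1
    return matrix


# Hypothetical scores for one object seen from a near and a far viewpoint.
near = {"red block": 0.30, "blue block": 0.35}
far = {"red block": 0.60, "blue block": 0.20}
fused = fuse_labels([near, far])
```

In this made-up example the near view alone would favor "blue block", while averaging with the far view selects "red block". Any off-diagonal count in the matrix returned by `confusion_matrix` is one object mistaken for another.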