Reasoning About Uncertainty using Markov Chains

Formal methods to tackle “Trial-and-Error” problems

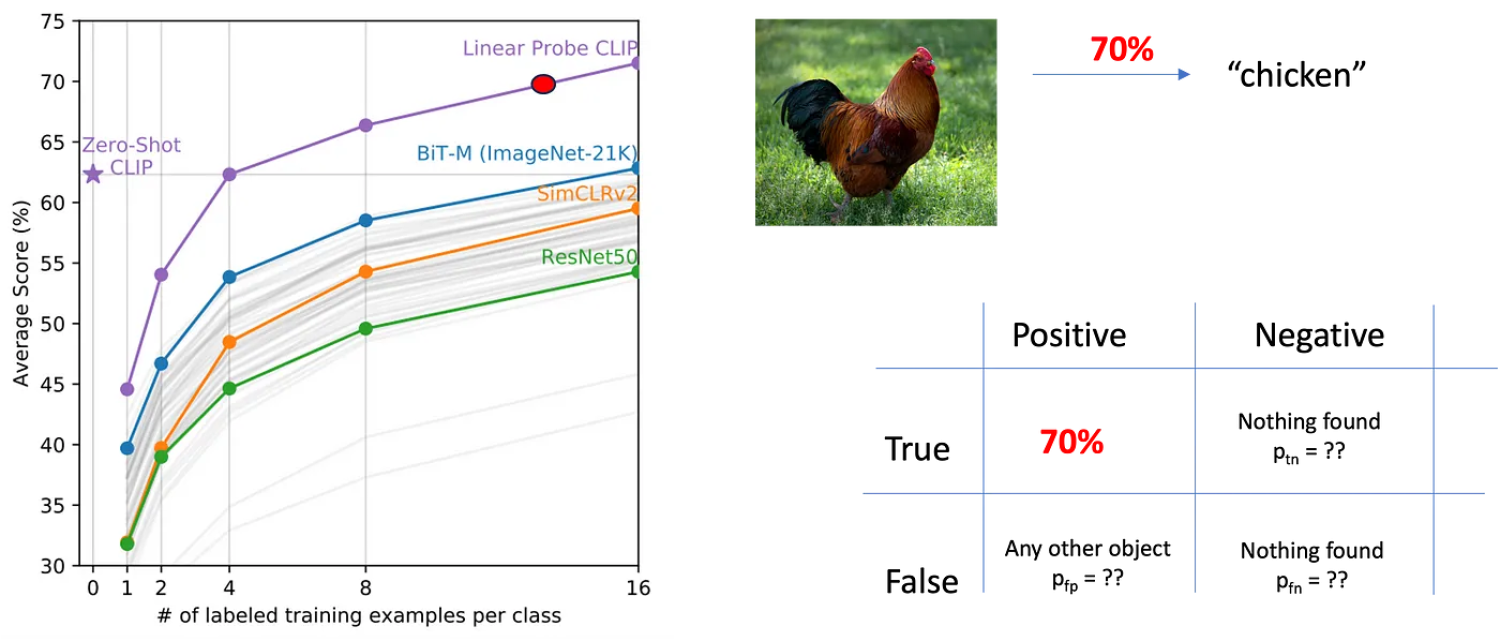

The ability to deal with unseen objects in a zero-shot manner makes machine learning models very attractive for applications in robotics, allowing robots to enter previously unseen environments and manipulate unknown objects therein.

While their accuracy in doing so is incredible compared with what was conceivable just a few years ago, uncertainty is not only here to stay but also requires a different treatment than is customary in machine learning when it is used in decision making.

This article describes recent results on dealing with what we call “trial-and-error” tasks and explains how optimal decisions can be derived by modeling the system as a continuous-time Markov chain, also known as a Markov jump process.
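To make the modeling idea concrete, here is a minimal sketch of a continuous-time Markov chain for a single trial-and-error task. It is an illustration under stated assumptions, not the model from the results discussed here: the three states (`trying`, `success`, `failure`) and the two constant rates `rate_success` and `rate_failure` are hypothetical. From `trying`, the chain jumps to one of the two absorbing states after an exponentially distributed dwell time, so the probability of having succeeded by time `t` has a closed form, which the small simulation cross-checks.

```python
import math
import random


def success_probability(rate_success, rate_failure, t):
    """Probability of being absorbed in 'success' by time t, starting in 'trying'.

    Assumes a hypothetical three-state chain with constant jump rates:
    trying -> success at rate_success, trying -> failure at rate_failure.
    """
    total = rate_success + rate_failure
    # The chain leaves 'trying' by time t with probability 1 - exp(-total * t),
    # and the jump lands in 'success' with probability rate_success / total.
    return (rate_success / total) * (1.0 - math.exp(-total * t))


def simulate(rate_success, rate_failure, t, runs=20000, seed=0):
    """Monte Carlo estimate of the same probability by sampling jump times."""
    rng = random.Random(seed)
    total = rate_success + rate_failure
    hits = 0
    for _ in range(runs):
        dwell = rng.expovariate(total)  # time until the chain leaves 'trying'
        if dwell <= t and rng.random() < rate_success / total:
            hits += 1
    return hits / runs


if __name__ == "__main__":
    # Hypothetical rates: two successes and one failure per unit time on average.
    print(success_probability(2.0, 1.0, 0.5))
    print(simulate(2.0, 1.0, 0.5))
```

With such a model in hand, comparing candidate strategies reduces to comparing quantities like this success probability, or the expected time to absorption, under each strategy's rates.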
